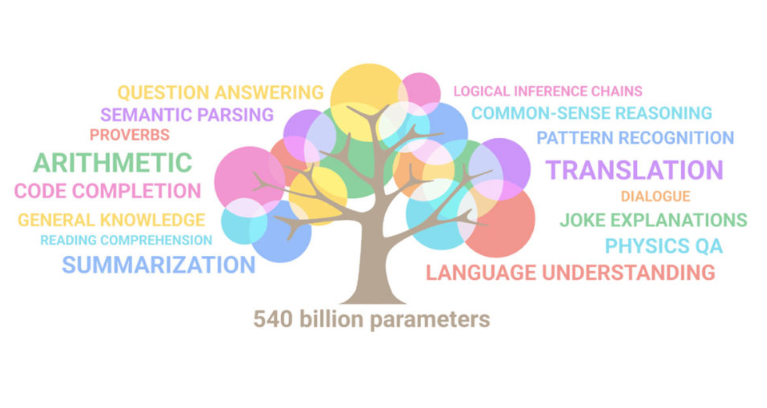

Timeline of Natural Language Processing over the years


NLP Models Timeline

- 1950s: Early rule-based systems for natural language understanding and generation

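- 1960s: Hidden Markov Models (HMMs). Their mathematical foundations were laid in the late 1960s. They were applied to speech recognition in the 1970s and to part-of-speech tagging in the 1980s.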

- 1980s: Latent Semantic Analysis (LSA) for information retrieval and text classification

- 1990s: Recurrent Neural Networks (RNNs), including Elman networks (1990) and Long Short-Term Memory (LSTM, 1997), for modeling sequences such as text and speech

- 1990s: Decision trees for tasks such as part-of-speech tagging and statistical parsing

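- Late 2000s: Convolutional Neural Networks (CNNs) adapted to NLP tasks such as tagging and semantic role labeling. In the mid-2010s they were applied to text classification and sentiment analysis.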

- 2000s: Support Vector Machines (SVMs) for text classification and sentiment analysis

- 2010s: Word embedding models such as Word2Vec and GloVe for representing words as dense vectors

- 2010s: Recurrent Neural Network Language Models (RNN-LMs) for language modeling and machine translation

- 2010s: Attention-based models for machine translation, such as Google’s Neural Machine Translation (GNMT)

- 2016: Generative Adversarial Networks (GANs), introduced in 2014 for image generation, adapted to text generation (e.g., SeqGAN)

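- 2017: The Transformer architecture, introduced in "Attention Is All You Need" for machine translation

- 2018–2019: Pre-trained Transformer models such as BERT (2018), GPT-2 (2019), and RoBERTa (2019) for language understanding and generation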

- 2019: Transformer-XL, which extends the Transformer with segment-level recurrence to model longer contexts in language modeling

- 2019: XLNet, which pre-trains a Transformer-XL backbone with a permutation-based language modeling objective

- 2019–2020: Large pre-trained models such as T5, which casts every task as text-to-text, and CTRL, which conditions generation on control codes, pre-trained on massive amounts of text

This timeline is not exhaustive. Many other models and techniques, each with its own strengths and weaknesses, have been developed over the years. The field of NLP also continues to evolve, and new approaches will keep emerging to handle increasingly complex tasks.
